In addition to the symposium on AI to assess animal welfare (Wednesday at 10:00) and the symposium on AI for behavior recognition (Friday at 10:00), this symposium offers a range of presentations on the use of AI for measuring behavior. It takes place on Thursday 26th May from 10:00 to 12:30.

10:00 – 10:20 Eline Eberhardt – Video-Tracking Locomotor Activity of Canines in Preclinical Safety Studies

Computer vision allows video-based 24/7 monitoring of activity and behavior in canines in preclinical safety studies. Artificial Neural Networks are developed to classify poses and track activity using multi-animal detection in canines. The computer vision model is validated against a collar-fixed accelerometer (Actiwatch Mini®) used to quantify locomotor activity in larger animals, while the pose classifier is validated against manual annotations. Our model shows very good accuracy for activity and pose classification in canines.

10:20 – 10:40 Tenzing Dolmans – Real-Time Adaptive Machine Learning Systems for Personalised Bruxism Management

This paper introduces SØVN, a wearable device using in-ear sensors to detect and disrupt bruxism (teeth grinding and jaw clenching). It’s a less invasive alternative to current detection methods like EMG. The device, leveraging machine learning, monitors jaw movements and provides real-time interventions like auditory stimuli. This innovative approach offers scalable, personalized treatment for bruxism, with potential applications in other health conditions requiring real-time monitoring and intervention.

10:40 – 11:00 Jeanne I.M. Parmentier – Measuring equine respiration in the field: an exploration of microphone data and deep learning detectors

Respiration in training horses, while often overlooked, can provide useful information about their health and performance. We aim to monitor the respiration of harness trotters in the field using a microphone and to detect respiratory events with deep learning models. We used Temporal Convolutional Networks (TCN), reaching high detection performance (F1-scores > 0.90) with only one downsampled microphone channel and an 8-block TCN. Our work suggests that near-real-time respiration monitoring is possible for training horses despite environmental noise.

BREAK

11:30 – 11:50 Alyx Elder – Challenging Machine-Learning with Underwater Whisker Tracking in South African Fur Seals (Arctocephalus pusillus)

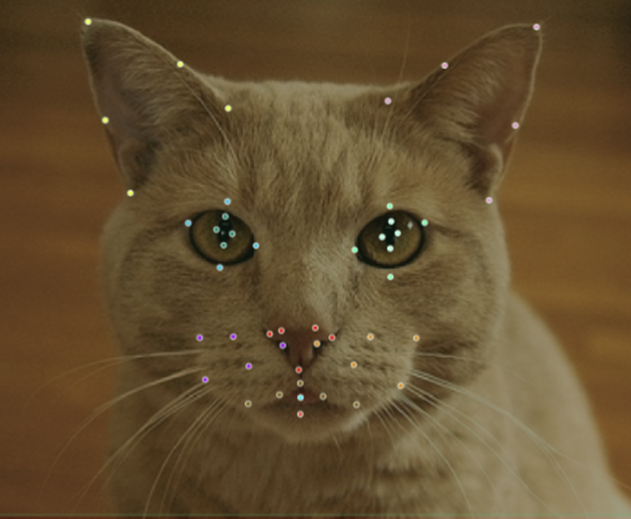

Tracking animals in challenging environments, such as underwater, has its limitations. This restricts researchers to more controlled settings, such as laboratories, and to animals that can be easily manipulated, such as rodents. Here, we will present a validation of the AI-based motion tracking software DeepLabCut against manually tracked landmarks, to see how well it tracks the whiskers of South African fur seals underwater while the animals complete a discrimination task in a zoo setting.

11:50 – 12:10 Harry Gill – Validation Of An AI-based Markerless Tracking Approach For Gait Analysis In Domestic Dogs

Current methods of kinematic gait analysis rely on retro-reflective markers to capture accurate movement data, which constrains researchers in both species and setting. In this presentation, we will show how two different methods of AI-based motion tracking performed, compared with manually tracked footage, in extracting kinematic parameters of gait from domestic dogs. Initial findings illustrate the limitations of pre-trained networks, whilst highlighting the potential of bespoke convolutional neural networks.

Measuring Behavior
